In the previous post we described the benefits of using Application Automation together with Network Analytics in the data center, in a public cloud, or in both. We described two solutions from Cisco that offer great value individually, and we explained how they multiply their power when used together in an integrated way.

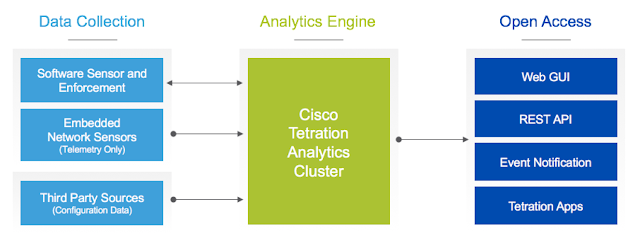

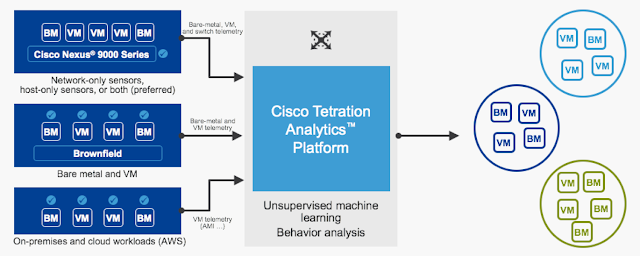

This post describes a lab activity that we implemented to demonstrate the integration of Cisco Tetration (network analytics) with Cisco CloudCenter (application deployment and cloud brokerage), creating a solution that combines deep insight into the application architecture and into the network flows.

The Application Profile managed by CloudCenter is the blueprint that defines the automated deployment of a software application in the cloud (public and private). We add information in the Application Profile to automate the configuration of the Tetration Analytics components during the deployment of the application.

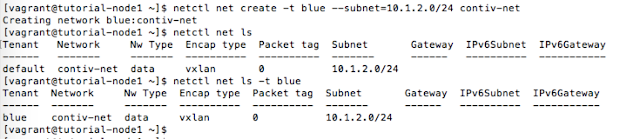

Deploy a new (or update an existing) Application Profile with Tetration support enabled

Intent of the lab:

To modify an existing Application Profile, or model a new one, so that Tetration is automatically configured to collect telemetry, including the automated installation of sensors.

Execution:

A Tetration Injector service is added to the application tiers to create a scope, define dedicated sensor profiles and intents, and automatically generate an application workspace to render the Application Dependency Mapping for each deployed application.
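The sequence of configuration actions described above can be pictured as an ordered plan. What follows is only a minimal sketch and not the actual Tetration Injector code: the `build_injector_plan` function, the `Action` class, the naming suffixes and the payload fields are all hypothetical and do not mirror the real Tetration API schema.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Action:
    """One configuration step the injector would perform (hypothetical model)."""
    kind: str
    name: str
    params: dict = field(default_factory=dict)


def build_injector_plan(deployment_name: str, tiers: List[str]) -> List[Action]:
    """Return the ordered actions for one deployment.

    In this sketch the scope comes first, because the sensor profile,
    the intent and the workspace all refer to it.
    """
    scope = Action("scope", deployment_name, {"tiers": list(tiers)})
    profile = Action("sensor_profile", f"{deployment_name}-profile",
                     {"scope": scope.name})
    intent = Action("intent", f"{deployment_name}-intent",
                    {"scope": scope.name, "profile": profile.name})
    workspace = Action("workspace", deployment_name, {"scope": scope.name})
    return [scope, profile, intent, workspace]


# Hypothetical deployment name and tier names, for illustration only.
plan = build_injector_plan("petclinic-demo", ["web", "db"])
for step in plan:
    print(step.kind, step.name)
```

Modeling the steps as data before executing them makes the order explicit and lets the same list be logged or reversed later for cleanup.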

Step 1 – Edit an existing Application Profile

Step 2 – Drag the Tetration Injector service into the Topology Modeler

Step 3 – Automate the deployment of the app: select a Tetration sensor type to be added

Tetration sensors can be of two types: Deep Visibility and Deep Visibility with Policy Enforcement. The Tetration Injector service allows you to select the type you want to deploy for this application. The deployment name will be reflected in the Tetration scope and application name.
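As a rough illustration of the choice made at deployment time, the sketch below models the two sensor types and derives the names that would appear in Tetration. The `SensorType` enum, the `injector_settings` function and the returned keys are hypothetical, and reusing the deployment name verbatim for both the scope and the application is an assumption: the real service may decorate the name differently.

```python
from enum import Enum


class SensorType(Enum):
    DEEP_VISIBILITY = "Deep Visibility"
    ENFORCEMENT = "Deep Visibility with Policy Enforcement"


def parse_sensor_type(label: str) -> SensorType:
    """Map the label chosen in the deployment form to a SensorType."""
    for sensor_type in SensorType:
        if sensor_type.value.lower() == label.strip().lower():
            return sensor_type
    raise ValueError(f"unknown sensor type: {label!r}")


def injector_settings(deployment_name: str, sensor_label: str) -> dict:
    """Collect the values a deployment would pass to the injector (sketch)."""
    sensor_type = parse_sensor_type(sensor_label)
    return {
        "sensor_type": sensor_type.value,
        "enforcement": sensor_type is SensorType.ENFORCEMENT,
        # Assumption: the deployment name is reused as-is for both names.
        "scope_name": deployment_name,
        "application_name": deployment_name,
    }


# Hypothetical deployment name, for illustration only.
print(injector_settings("petclinic-demo", "Deep Visibility"))
```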

In addition to deploying the sensors, the Tetration Injector configures the target Tetration cluster and logs every configuration action through the CloudCenter centralized logging capabilities.

The activity is executed by the CCO (CloudCenter Orchestrator):

Step 4 – New resources created on the Tetration cluster

Once the application has been deployed from the CloudCenter self-service catalog, you can open the Tetration user interface and verify that all the resources needed to identify the packet flows coming from the new application have been created:

Tetration Analytics – Application Dependency Mapping

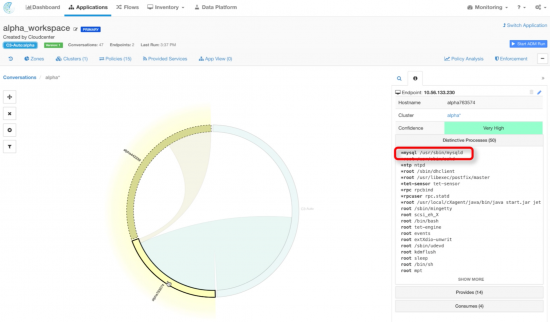

An application workspace has been created automatically for the deployed application, through the Tetration API: it shows the communication among all the endpoints and the processes in the operating system that generate and receive the network flows.

The following interactive maps are generated as soon as the network packets, captured by the sensors when the application is used, are processed in the Tetration cluster.

The Cisco Tetration Analytics machine learning algorithms grouped the applications based on distinctive processes and flows.

The figure below shows what the distinctive process view looks like for the web tier:

The distinctive process view for the database tier:

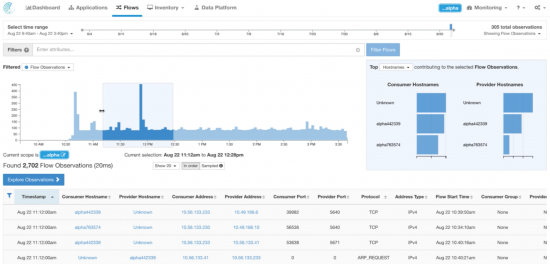

Flow search on the deployed application:

Detail of a specific flow from the Web tier to the DB tier:

Terminate the application: De-provisioning

When you de-provision the software application as part of the lifecycle managed by CloudCenter (with one click), the following cleanup actions will be managed by the orchestrator automatically:

- Turn off and delete VMs in the cloud, including the software sensors

- Delete the application information in Tetration

- Clear all configuration items and scopes
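The cleanup actions listed above can be sketched as an ordered sequence of steps. This is an illustration only: `run_cleanup` and the step names are hypothetical, the lambdas stand in for real calls to the cloud and to Tetration, and continuing after a failed step is a design choice of this sketch, not a documented behavior of the orchestrator.

```python
from typing import Callable, List, Tuple


def run_cleanup(steps: List[Tuple[str, Callable[[], None]]]) -> List[str]:
    """Run every cleanup step in order and return the names of failed steps.

    A failing step does not stop the following ones, so a VM that cannot
    be deleted does not prevent the Tetration scope from being cleared.
    """
    failed = []
    for name, action in steps:
        try:
            action()
        except Exception as exc:
            print(f"cleanup step {name!r} failed: {exc}")
            failed.append(name)
    return failed


# Placeholder actions that only record that they ran.
log = []
steps = [
    ("delete_vms_and_sensors", lambda: log.append("vms")),
    ("delete_application_info", lambda: log.append("application")),
    ("clear_config_and_scopes", lambda: log.append("scopes")),
]
failed = run_cleanup(steps)
print(log, failed)
```

Returning the list of failed steps gives the caller something concrete to retry or report, instead of silently leaving orphaned configuration behind.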

Conclusion

The combined use of automation (deploying both the applications and the sensors, and configuring the context in the analytics platform) and of the telemetry data processed by Tetration helps in building a security model based on zero-trust policies.
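To make the zero-trust idea concrete, here is a toy sketch of an allow-list derived from observed flows: whatever was not observed and approved is denied. The `Flow` record, its fields, the tier names and the port numbers are hypothetical examples, and this is not how Tetration represents or enforces its policies.

```python
from typing import Iterable, NamedTuple, Set


class Flow(NamedTuple):
    src_tier: str
    dst_tier: str
    dst_port: int
    protocol: str


def build_allow_list(observed: Iterable[Flow]) -> Set[Flow]:
    """Turn the flows seen during normal use into an explicit allow-list."""
    return set(observed)


def is_allowed(flow: Flow, allow_list: Set[Flow]) -> bool:
    """Zero trust: a flow is denied unless it is explicitly listed."""
    return flow in allow_list


# Example telemetry: two flows to the web tier and one from web to db.
observed = [
    Flow("internet", "web", 443, "tcp"),
    Flow("web", "db", 3306, "tcp"),
    Flow("web", "db", 3306, "tcp"),  # duplicates collapse in the set
]
allow_list = build_allow_list(observed)

print(is_allowed(Flow("web", "db", 3306, "tcp"), allow_list))
print(is_allowed(Flow("internet", "db", 3306, "tcp"), allow_list))
```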

The integrated deployment enables the following use cases:

- Get communication visibility across different application components

- Implement consistent network segmentation

- Migrate applications across data center infrastructure

- Unify systems after mergers and acquisitions

- Move applications to cloud-based services

Automation reduces the manual tasks of configuring, collecting data, analyzing and investigating. It makes security more pervasive and predictive, and it even improves your ability to react when a problem is detected.

Both platforms are constantly evolving and the RESTful API approach enables extreme customization in order to accommodate your business needs and implement features as they get released.

The upcoming Cisco Tetration Analytics release, 2.1.1, will bring new data ingestion modes such as ERSPAN and NetFlow, as well as neighborhood graphs to validate and assure policy intent on software sensors.

You can learn more from the sources linked in this post. Feel free to add your comments here, or contact us for direct support if you want to evaluate how this solution applies to your business and technical requirements.

Credits

This post is co-authored with a colleague of mine, Riccardo Tortorici.

He is the real geek: he created the excellent lab for the integration that we describe here, and I just took notes from his work.