Introduction

There is a big (justified) hype around containers and microservices.

Indeed, many people speak about the subject but few have implemented a real project.

There are also a lot of excellent resources on the web, so there is no real need for my additional contribution.

I just want to offer my few readers another proof that a great solution exists for container networking, and that it works well.

You will find evidence in this post, along with pointers to resources and tutorials.

I will explain it in very basic terms, as I did for Cisco ACI and SDN, because I’m not talking to network specialists (you know I’m not one either) but to software developers and designers.

Most of the content here is reused from my sessions at Codemotion 2017 in Rome and Amsterdam (you can see the recordings on YouTube).

Containers Networking

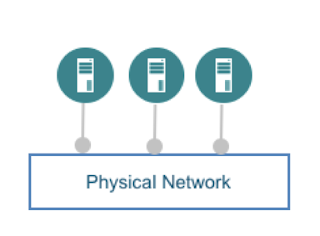

When the world moved from bare metal servers to virtual machines, virtual networks were also created and added great value (plus some need for management).

[Figure: Initially networking was simple]

Of course virtual networks make life easier for developers and server managers, but they also add complexity for network managers: now there are two distinct networks that need to be managed and integrated.

You cannot simply believe that an overlay network runs on a physical dumb pipe with infinite bandwidth, zero latency and no need for end to end troubleshooting.

[Figure: Virtual Machines connected to an overlay network]

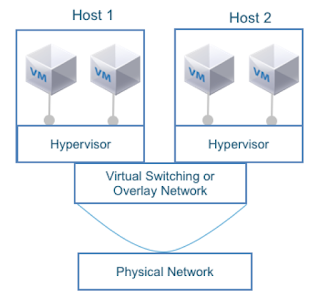

With the advent of containers, their virtual networking was layered on top of the VM virtual network: most containers run inside VMs for a number of reasons, though there are good examples of container runtimes on physical hosts.

So now you have 3 network layers stacked on top of each other, and a need to manage the network end to end that makes your work even more complex.

[Figure: Containers inside VM: many layers of overlay networks]

This increased abstraction creates some issues when you try to leverage the value of resources in the physical environment:

- connectivity: it's difficult to insert network services, like load balancers and firewalls, in the data path of microservices (regardless of the virtual or physical nature of the appliances).

- performance: every overlay tier brings its own encapsulation (e.g. VXLAN). Encapsulation over encapsulation over encapsulation starts penalizing performance... just a little ;-)

- hardware integration: some advanced features of your network (performance optimization, security) cannot be leveraged.
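To put a rough number on the encapsulation penalty, here is a small sketch. It assumes VXLAN over IPv4 without VLAN tags (50 bytes of extra headers per layer) and a 1500-byte physical MTU; the function name `inner_mtu` is mine, and real deployments may use different encapsulations or jumbo frames.

```python
# Headers added by one VXLAN layer over IPv4 (assumption: no VLAN tag,
# no IP options): Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8).
VXLAN_OVERHEAD_BYTES = 14 + 20 + 8 + 8  # 50 bytes


def inner_mtu(physical_mtu: int, overlay_layers: int) -> int:
    """Largest packet the innermost network can carry without fragmentation."""
    if overlay_layers < 0:
        raise ValueError("overlay_layers must be >= 0")
    mtu = physical_mtu - overlay_layers * VXLAN_OVERHEAD_BYTES
    if mtu <= 0:
        raise ValueError("too many overlay layers for this MTU")
    return mtu


if __name__ == "__main__":
    # 0 layers = bare metal, 1 = VM overlay, 2 = container overlay inside the VM overlay
    for layers in range(3):
        mtu = inner_mtu(1500, layers)
        lost = 100 * (1500 - mtu) / 1500
        print(f"{layers} overlay layer(s): inner MTU {mtu} bytes, "
              f"{lost:.1f}% of the physical MTU spent on encapsulation")
```

The byte count is only part of the story (each layer also costs CPU cycles for encapsulation and decapsulation), but it shows how the cost grows with every stacked overlay.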

Do not despair: we will see that a solution exists for this mess.

Microservices Networking

This short paragraph describes the existing implementation of the networking layer inside the container runtime.

Generally it is based on a pluggable architecture: the container engine delegates the management of the container's traffic to a plugin of your choice. You can choose among a number of good solutions from the open source community, including the default implementation from Docker.

Minimally the networking layer provides:

- IP Connectivity in Container’s Network Namespace

- IPAM, and Network Device Creation (eth0)

- Route Advertisement or Host NAT for external connectivity

[Figure: The networking for containers]

The Container Network Model (CNM)

Proposed by Docker to provide networking abstractions and APIs for containers.

It is based on the concept of a Sandbox that contains configuration of a container's network stack (Linux network namespace).

An endpoint is a container's interface into a network (a pair of virtual Ethernet interfaces).

A network is a collection of arbitrary endpoints that can communicate.

A container can have multiple endpoints (and therefore belong to multiple networks).

CNM allows multiple drivers to coexist, with each network managed by one driver.

It provides driver APIs for IPAM and for endpoint creation/deletion:

- IPAM Driver APIs: Create/Delete Pool, Allocate/Free IP Address

- Network Driver APIs: Network Create/Delete, Endpoint Create/Delete/Join/Leave

Used by Docker Engine, Docker Swarm, and Docker Compose.

It also works with other schedulers that run standard containers, e.g. Nomad or Mesos.

[Figure: Container Network Model]
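To make the CNM vocabulary more concrete, here is a toy model in Python. It is only a sketch of the concepts (sandbox, endpoint, network, IPAM pool): the class and method names are my own and are not the libnetwork driver API, and nothing here touches real network namespaces.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Sandbox:
    """Network stack configuration of one container (its network namespace)."""
    container: str
    interfaces: dict = field(default_factory=dict)  # network name -> IP address


class Network:
    """A set of endpoints that can communicate, managed by a single driver."""

    def __init__(self, name: str, driver: str, subnet: str):
        self.name = name
        self.driver = driver
        # Toy IPAM pool: every usable host address in the subnet, in order.
        self._pool = list(ipaddress.ip_network(subnet).hosts())
        self.endpoints = {}  # container name -> allocated IP address

    def join(self, sandbox: Sandbox):
        """Create an endpoint: allocate an address and plug it into the sandbox."""
        if sandbox.container in self.endpoints:
            raise ValueError(f"{sandbox.container} already joined {self.name}")
        if not self._pool:
            raise RuntimeError(f"no free addresses left in {self.name}")
        address = self._pool.pop(0)
        self.endpoints[sandbox.container] = address
        sandbox.interfaces[self.name] = address
        return address

    def leave(self, sandbox: Sandbox) -> None:
        """Delete the endpoint and return its address to the pool."""
        address = self.endpoints.pop(sandbox.container)
        del sandbox.interfaces[self.name]
        self._pool.insert(0, address)


if __name__ == "__main__":
    frontend = Network("frontend", driver="bridge", subnet="10.0.1.0/24")
    backend = Network("backend", driver="overlay", subnet="10.0.2.0/24")
    web = Sandbox("web")
    frontend.join(web)
    backend.join(web)  # one container, two endpoints, two networks
    print(web.interfaces)
```

Note how each network keeps its own driver and its own address pool: that is what lets different drivers coexist on the same host.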

The Container Network Interface (CNI)

Proposed by CoreOS as part of the appc specification, and also used by Kubernetes.

It is a common interface between the container runtime and the network plugin.

It gives the driver freedom to manipulate the network namespace.

The network is described by a JSON configuration.

Plugins support two commands:

- Add Container to Network

- Remove Container from Network

[Figure: Container Network Interface]
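The CNI contract is small enough to sketch in a few lines. In the real interface the runtime executes the plugin binary, passes the network configuration as JSON on stdin, and sets environment variables such as `CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS` and `CNI_IFNAME`. The toy handler below only mimics that dispatch in memory: the function name `handle`, the `attachments` dictionary and the simplified result are my assumptions, and no namespace is actually configured.

```python
import json

# A network described by JSON, in the style of the CNI bridge plugin examples.
EXAMPLE_CONFIG = """
{
  "cniVersion": "0.3.1",
  "name": "demo-net",
  "type": "bridge",
  "ipam": {"type": "host-local", "subnet": "10.22.0.0/16"}
}
"""


def handle(env: dict, stdin_text: str, attachments: dict) -> dict:
    """Dispatch one CNI-style invocation (ADD or DEL).

    env stands in for the environment variables set by the runtime,
    stdin_text for the JSON configuration, and attachments for whatever
    state a real plugin would keep (hypothetical, in memory only).
    """
    config = json.loads(stdin_text)
    command = env["CNI_COMMAND"]
    key = (config["name"], env["CNI_CONTAINERID"])

    if command == "ADD":
        # A real plugin would create the interface inside CNI_NETNS here.
        attachments[key] = {"netns": env["CNI_NETNS"], "ifname": env["CNI_IFNAME"]}
        # Simplified result: the real one also reports IPs, routes and DNS.
        return {
            "cniVersion": config["cniVersion"],
            "interfaces": [{"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]}],
        }
    if command == "DEL":
        # Removing a container that is already gone is not an error.
        attachments.pop(key, None)
        return {}
    raise ValueError(f"unsupported CNI_COMMAND: {command}")


if __name__ == "__main__":
    state = {}
    env = {
        "CNI_COMMAND": "ADD",
        "CNI_CONTAINERID": "abc123",
        "CNI_NETNS": "/var/run/netns/abc123",
        "CNI_IFNAME": "eth0",
    }
    print(json.dumps(handle(env, EXAMPLE_CONFIG, state), indent=2))
```

Because the plugin receives the namespace path and is free to do whatever it wants inside it, the runtime does not need to know anything about bridges, overlays or routing.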

Many good implementations of the models above are available on the web.

You can pick one to complement the default implementation with a more sophisticated solution and benefit from better features.

It looks so easy on my laptop. Why is it complex?

When a developer sets up the environment on their laptop, everything is simple.

You test your code and the infrastructure just works (you can also enjoy managing... the infrastructure as code).

No issues with performance, security, bandwidth, logs, or conflicts on resources (IP addresses, VLANs, names…).

But when you move to an integration test environment, or to a production environment, it’s no longer that easy.

IT administrators and the operations team are well aware of the need for stability, security, multi-tenancy and other enterprise-grade features.

So not all solutions are equal, especially for networking. Let's discuss their impact on Sally and Mike:

Sally (software developer) - she expects:

- Develop and test fast

- Agility and Elasticity

- Does not care about other users

Mike (IT Manager) - he cares for:

- Manage infrastructure

- Stability and Security

- Isolation and Compliance

These conflicting goals and priorities make collaboration harder and get in the way of adopting a DevOps approach.

A possible solution is policy-based container networking.

Policy-based management is simpler thanks to declarative tags (used instead of complex command syntax), and it is faster because you manage groups of resources instead of single objects (think of the cattle vs pets example).

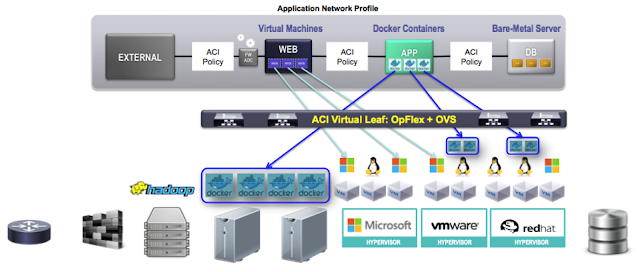

What is Contiv

Contiv unifies containers, VMs, and bare metal servers with a single networking fabric, allowing container networks to be addressable from VM and bare-metal network endpoints. Contiv combines strong network performance, support for industry-leading hardware, and an application-oriented policy that can move across networks together with the application.

Contiv's goal is to manage the "operational intent" of your deployment in a declarative way, as you generally do for the "application intent" of your microservices. This allows for a true infrastructure as code management and easy implementation of DevOps practices.

Contiv provides an IP address per container and eliminates the need for host-based port NAT. It works with different kinds of networks like pure layer 3 networks, overlay networks, and layer 2 networks, and provides the same virtual network view to containers regardless of the underlying technology.

Contiv works with all major schedulers like Kubernetes, Docker Swarm, Mesos and Nomad. These schedulers provide compute resources to your containers and Contiv provides networking to them. Contiv supports both CNM (Docker networking Architecture) and CNI (CoreOS and Kubernetes networking architecture).

Contiv has L2, L3 (BGP), Overlay (VXLAN) and ACI modes. It has built-in east-west service load balancing. Contiv also isolates traffic by keeping control traffic and data traffic separate.

It manages global resources: IPAM, VLAN/VXLAN pools.

Contiv Architecture

Contiv is made of a master node and an agent that runs on every host of your server farm:

The master node(s) offer tools to manipulate Contiv objects. It is called Netmaster and implements CRUD (create, read, update, delete) operations using a REST interface. It is expected to be used by infra/ops teams and offers RBAC (role based access control).

The host agent (Netplugin) implements cluster-wide network and policy enforcement. It is stateless: very useful in case of a node failure/restart and upgrade.

A command line utility (that is a client of the master's REST API) is provided: it's named netctl.

[Figure: Contiv's architecture]

Examples

Learning Contiv is very easy: from the Contiv website there is a great tutorial that you can download and run locally.

For your convenience, I executed it on my computer and copied some screenshots here, with my comments to explain it step by step.

First, let's look at normal docker networks (without Contiv) and how you create a new container and connect it to the default network:

[Figure: Networks in Docker]

You can inspect the virtual bridge (in the Linux server) that is managed by Docker: look at the IPAM section of the configuration and its Subnet, then at the vanilla-c container and its IP address.

[Figure: How Docker sees its networks]

You can also look at the network config from within the container:

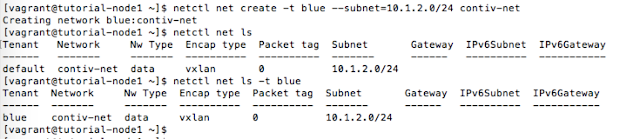

Now we want to create a new network with Contiv, using its netctl command line interface:

[Figure: Contiv's netctl command line interface]

Here you can see how Docker lists and uses a Contiv network:

Look at the IPAM section, the name of the Driver, the name of the network and of the tenant:

We now connect a new container to the contiv-net network as it is seen by Docker: the command is identical when you use a network created by Contiv.

Multi tenancy

You can create a new Tenant using the netctl tenant create command:

[Figure: Creating tenants in Contiv]

A tenant has its own networks, whose names and even subnets can overlap with those of other tenants: in the example below, the two networks are completely isolated, and the default tenant and the blue tenant ignore each other, even though the two networks have the same name and use the same subnet.

Everything works as if the other network did not exist (look at the "-t blue" argument in the commands).

[Figure: Two different networks, with identical name and subnet]
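The isolation between tenants can be pictured with a toy model where every network is identified by the pair (tenant, network name) rather than by its name alone. This is only a sketch of the idea: the `Fabric` class and its methods are hypothetical and have nothing to do with how Contiv is implemented internally.

```python
import ipaddress


class Fabric:
    """Toy multi-tenant network registry (hypothetical, for illustration)."""

    def __init__(self):
        self._networks = {}    # (tenant, network name) -> subnet
        self._containers = {}  # container name -> (tenant, network name)

    def create_network(self, name: str, subnet: str, tenant: str = "default") -> None:
        key = (tenant, name)
        if key in self._networks:
            raise ValueError(f"network {name} already exists in tenant {tenant}")
        # The same name and subnet may be reused freely by another tenant.
        self._networks[key] = ipaddress.ip_network(subnet)

    def attach(self, container: str, name: str, tenant: str = "default") -> None:
        key = (tenant, name)
        if key not in self._networks:
            raise KeyError(f"no network {name} in tenant {tenant}")
        self._containers[container] = key

    def can_talk(self, a: str, b: str) -> bool:
        """Containers communicate only on the same network of the same tenant."""
        return self._containers[a] == self._containers[b]


if __name__ == "__main__":
    fabric = Fabric()
    # Same name, same subnet, two different tenants.
    fabric.create_network("contiv-net", "10.1.2.0/24")
    fabric.create_network("contiv-net", "10.1.2.0/24", tenant="blue")
    fabric.attach("c1", "contiv-net")
    fabric.attach("c2", "contiv-net")
    fabric.attach("blue-c1", "contiv-net", tenant="blue")
    print(fabric.can_talk("c1", "c2"))       # same tenant, same network
    print(fabric.can_talk("c1", "blue-c1"))  # different tenants
```

Since the tenant is part of the key, two networks that look identical from the inside never collide.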

Let’s attach a new container to the contiv-net network in the blue tenant (the tenant name is explicitly used in the command, to specify the tenant's network):

All the containers connected to this network will communicate. The network extends all across the cluster and benefits from all the features of the Contiv runtime (see the website for a complete description).

The policy model: working with Groups

Contiv provides a way to apply isolation policies among groups of containers (across tenants or, if you prefer, within a single tenant). To do that we create a simple policy called db-policy, add some rules to it that define which ports are allowed, and associate it with a group (db-group, which will contain all the containers that need to be treated the same).

[Figure: Creating a policy in Contiv]

[Figure: Adding rules to a policy]

Finally, we associate the policy with a group (a group is an arbitrary collection of containers, e.g. a tier for a microservice) and then run some containers that belong to db-group:

[Figure: Creating a group]

The policy named db-policy (defining, in this case, which ports are open and closed) is now applied to all three containers: managing many endpoints as a single object makes operations easy and fast. Just think about auto-scaling (especially when integrated with Swarm, Kubernetes, etc.).
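The value of attaching the policy to the group, and not to each container, can be sketched as follows. This is a toy model under my own assumptions: the classes, the "highest priority wins" evaluation, the default-allow behaviour and the port number are illustrative choices, not a description of how Contiv evaluates rules.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    protocol: str      # e.g. "tcp"
    action: str        # "allow" or "deny"
    port: int = 0      # 0 means "any port"
    priority: int = 1  # assumption: the higher number wins when rules overlap


@dataclass
class Policy:
    name: str
    rules: list = field(default_factory=list)

    def permits(self, protocol: str, port: int) -> bool:
        matching = [r for r in self.rules
                    if r.protocol == protocol and r.port in (0, port)]
        if not matching:
            return True  # assumption of this sketch: unmatched traffic passes
        return max(matching, key=lambda r: r.priority).action == "allow"


@dataclass
class Group:
    """An arbitrary collection of containers that share one policy."""
    name: str
    policy: Policy
    members: set = field(default_factory=set)

    def permits(self, container: str, protocol: str, port: int) -> bool:
        if container not in self.members:
            raise KeyError(f"{container} is not in group {self.name}")
        return self.policy.permits(protocol, port)


if __name__ == "__main__":
    db_policy = Policy("db-policy", [
        Rule("tcp", "deny"),                            # close every TCP port...
        Rule("tcp", "allow", port=8888, priority=10),   # ...except this one
    ])
    db_group = Group("db-group", db_policy)
    for name in ("db-1", "db-2", "db-3"):
        db_group.members.add(name)  # no per-container rule to write
    print(db_group.permits("db-3", "tcp", 8888))
    print(db_group.permits("db-3", "tcp", 22))
```

When an auto-scaler adds a fourth container, joining the group is the only step needed: the rules are already there.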

The tutorial shows many other interesting features in Contiv, but I don't want to make this post too long :-)

Features that make Contiv the best solution for microservices networking

- Support for grouping applications or applications' components.

- Easy scale-out: instances of containerized applications are grouped together and managed consistently.

- Policies are specified on a micro-service tier, rather than on individual container workloads.

- Efficient forwarding between microservice tiers.

- Contiv allows for a fixed VIP (DNS published) for a micro-service.

- Containers within the micro-services can come and go fast, as resource managers auto-scale them, but policies are already there... waiting for them.

- Containers' IP addresses are immediately mapped to the service IP for east-west traffic.

- Contiv eliminates the single point of forwarding (proxy) between micro-service tiers.

- Application visibility is provided at the services level (across the cluster).

- Performance is great (see references below).

- It mirrors the policy model that made Cisco ACI an easy and efficient solution for SDN, regardless of the availability of an ACI fabric (Contiv also works with other hardware and even with all-virtual networks).

I really invite you to have a look and test it yourself using the tutorial.

It's easy and not invasive at all: seeing is believing.